Overview

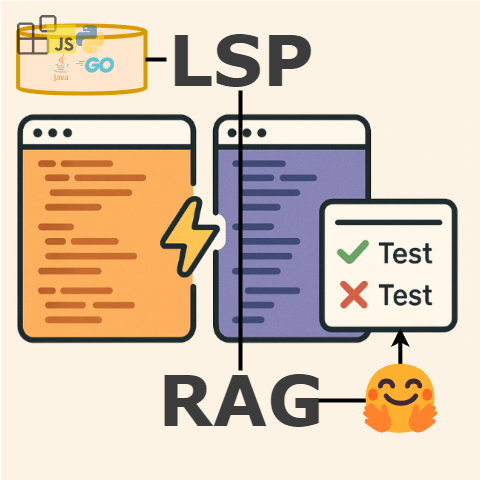

LSPRAG (Language Server Protocol-based AI Generation) is a cutting-edge VS Code extension that leverages Language Server Protocol (LSP) integration and Large Language Models (LLMs) to automatically generate high-quality unit tests in real-time. By combining semantic code analysis with AI-powered generation, LSPRAG delivers contextually accurate and comprehensive test suites across multiple programming languages.

✨ Key Features

🚀 Real-Time Generation

- Generate unit tests instantly as you code

- Context-aware test creation based on function semantics

- Intelligent test case generation with edge case coverage

🌍 Multi-Language Support

- Java: Full support with JUnit framework

- Python: Comprehensive pytest integration

- Go: Native Go testing framework support

- Extensible: Easy to add support for additional languages
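Each supported framework has its own convention for where tests live. The sketch below maps a source file to a conventional test-file name. pytest collects `test_*.py` by default, `go test` only treats `*_test.go` files as tests, and `*Test.java` is the usual JUnit/Maven naming. The helper `conventional_test_path` is hypothetical and not part of LSPRAG, which may name its output files differently.

```python
from pathlib import PurePosixPath


def conventional_test_path(source: str) -> str:
    """Return a conventional test-file path for a source file (hypothetical helper)."""
    path = PurePosixPath(source)
    stem, suffix = path.stem, path.suffix
    if suffix == ".py":
        name = f"test_{stem}.py"      # pytest collects test_*.py by default
    elif suffix == ".go":
        name = f"{stem}_test.go"      # `go test` only compiles *_test.go files as tests
    elif suffix == ".java":
        name = f"{stem}Test.java"     # common JUnit / Maven Surefire convention
    else:
        raise ValueError(f"unsupported language: {suffix}")
    return str(path.with_name(name))
```

Adding a language to a helper like this means adding one branch, which loosely mirrors the "Extensible" point above.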

🛠️ Installation & Setup

1. Download the extension named LSPRAG. Install it from the VS Code Marketplace, or open Quick Open (Ctrl+P) and run: `ext install LSPRAG.LSPRAG`

2. Set up the LLM in VS Code settings

Option A: VS Code Settings UI

- Open Settings (Ctrl/Cmd + ,)

- Search for "LSPRAG"

- Configure provider, model, and API keys

- For example, set the provider to `deepseek` and the model to `deepseek-chat`. Alternatively, set the provider to `openai` and the model to `gpt-4o-mini` or `gpt-5`.

Option B: Direct JSON Configuration

For example, add the settings below to `.vscode/settings.json`:

{
  "LSPRAG": {
    "provider": "deepseek",
    "model": "deepseek-chat",
    "deepseekApiKey": "your-api-key",
    "openaiApiKey": "your-openai-key",
    "localLLMUrl": "http://localhost:11434",
    "savePath": "lsprag-tests",
    "promptType": "detailed",
    "generationType": "original",
    "maxRound": 3
  }
}

3. You are ready!
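Before running the extension, it can help to sanity-check the file. The sketch below accepts both the nested `"LSPRAG": {...}` form and the dotted `"LSPRAG.provider"` form used in this README. It reports whether the key the chosen provider seems to need is present. The provider-to-key mapping is an assumption inferred from the configuration examples here, and `check_settings` is a hypothetical helper, not an LSPRAG command.

```python
import json
from pathlib import Path

# Assumed mapping, inferred from the configuration examples in this README.
REQUIRED_KEY = {
    "deepseek": "deepseekApiKey",
    "openai": "openaiApiKey",
    "ollama": "localLLMUrl",
}


def lsprag_settings(raw: dict) -> dict:
    """Collect LSPRAG settings from either the nested or the dotted-key form."""
    settings = dict(raw.get("LSPRAG", {}))   # nested: {"LSPRAG": {"provider": ...}}
    for key, value in raw.items():
        if key.startswith("LSPRAG."):        # dotted: {"LSPRAG.provider": ...}
            settings[key[len("LSPRAG."):]] = value
    return settings


def check_settings(raw: dict) -> list[str]:
    """Return a list of problems; an empty list means the LLM setup looks complete."""
    s = lsprag_settings(raw)
    provider = s.get("provider", "deepseek")  # default listed under Core Settings
    if provider not in REQUIRED_KEY:
        return [f"unknown provider: {provider!r}"]
    needed = REQUIRED_KEY[provider]
    return [] if s.get(needed) else [f"missing LSPRAG.{needed} for provider {provider!r}"]


def check_settings_file(path: str = ".vscode/settings.json") -> list[str]:
    # Assumes plain JSON; VS Code also allows comments (JSONC), which json.loads rejects.
    return check_settings(json.loads(Path(path).read_text(encoding="utf-8")))
```

For example, `check_settings({"LSPRAG.provider": "openai"})` reports the missing `LSPRAG.openaiApiKey`.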

Basic Workflow

1. Open Your Project

- Open your workspace in VS Code

- Or clone our project and use its demo files:

  `git clone https://github.com/THU-WingTecher/LSPRAG.git`

- Navigate to the demo test files: `LSPRAG/src/test/fixtures/python`

- In the editor, open the File menu at the top left, choose File → Open Folder, and select `LSPRAG/src/test/fixtures/python` as your workspace

[Optional] Test core utilities

- Check your current settings: Cmd/Ctrl + Shift + P → LSPRAG: Show Current Settings

- Test LLM availability: Cmd/Ctrl + Shift + P → LSPRAG: Test LLM

- Test Language Server availability: Cmd/Ctrl + Shift + P → LSPRAG: Test Language Server

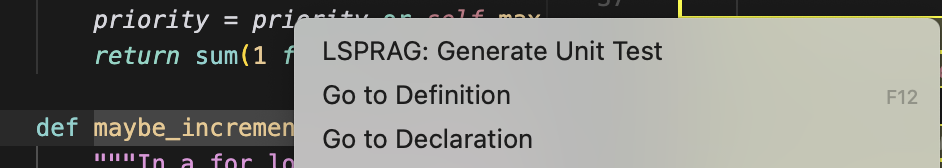

2. Generate Tests

- Navigate to any function or method

- Right-click within the function definition

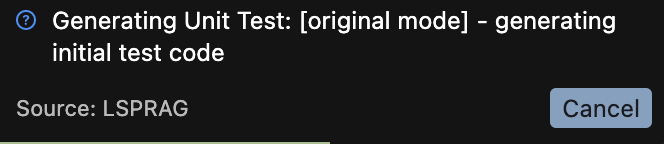

- Select "LSPRAG: Generate Unit Test" from the context menu

- Wait for generation to complete

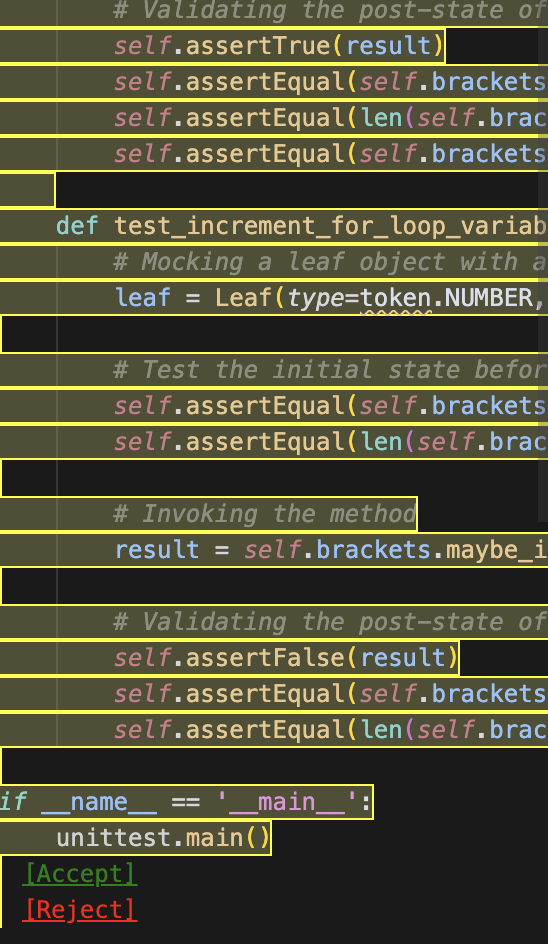
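For orientation, here is the general shape of a pytest suite for a small focal function, covering a normal case, out-of-range inputs, and boundaries. This is a hand-written illustration, not actual LSPRAG output; `clamp` is a hypothetical function. The error case uses plain `try`/`except` so the block runs without importing pytest. In a real pytest suite, `pytest.raises` is the idiomatic choice.

```python
def clamp(value: float, low: float, high: float) -> float:
    """Focal function: restrict value to the closed interval [low, high]."""
    if low > high:
        raise ValueError("low must not exceed high")
    return max(low, min(value, high))


# Tests of the kind a generator might propose; pytest collects test_* functions.
def test_clamp_inside_range():
    assert clamp(5, 0, 10) == 5


def test_clamp_below_and_above():
    assert clamp(-3, 0, 10) == 0
    assert clamp(42, 0, 10) == 10


def test_clamp_boundaries_are_inclusive():
    assert clamp(0, 0, 10) == 0
    assert clamp(10, 0, 10) == 10


def test_clamp_rejects_inverted_bounds():
    try:
        clamp(1, 10, 0)
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError")
```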

3. Review & Deploy

- Generated tests will appear with accept/reject options

4. Final Result

- All logs, including LLM prompts, the specific cfg, and diagnostic-fix histories, are saved under `{your-workspace}/lsprag-workspace/`

- If you click [Accept], the test file is saved to `{your-workspace}/lsprag-tests`

- You can change where tests are saved through the save path setting (`LSPRAG.savePath`). Edit it in the VS Code extension settings, the same interface you used to set up the LLM.
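The sketch below computes the two output locations described above from a workspace root and the `savePath` value. The helper name is hypothetical. It also assumes that a relative `savePath` is resolved against the workspace root, which matches the default layout above, and that an absolute path is used as-is. That last behaviour is an assumption, not something this README states.

```python
from pathlib import Path


def lsprag_output_dirs(workspace: str, save_path: str = "lsprag-tests") -> dict[str, Path]:
    """Return where logs and accepted tests end up (hypothetical helper)."""
    root = Path(workspace)
    save = Path(save_path)
    return {
        "logs": root / "lsprag-workspace",
        # Assumption: relative savePath values are joined onto the workspace root.
        "tests": save if save.is_absolute() else root / save,
    }
```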

Command Palette Commands

- `LSPRAG: Generate Unit Test` - Generate tests for the selected function

- `LSPRAG: Show Current Settings` - Display the current configuration

- `LSPRAG: Test LLM` - Test LLM connectivity and configuration

- `LSPRAG: Test Language Server` - Test language server functionality (symbol finding & token extraction)
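"Symbol finding" and "token extraction" correspond to standard LSP requests: `textDocument/documentSymbol` and `textDocument/semanticTokens/full`. The latter was added in LSP 3.16. The sketch below frames those requests as the LSP base protocol defines them, a `Content-Length` header counting body bytes followed by a JSON-RPC 2.0 body. It only illustrates the wire format. LSPRAG most likely goes through VS Code's own language-server APIs instead. A real session also needs the `initialize` handshake and a `didOpen` notification first.

```python
import json


def lsp_request(request_id: int, method: str, params: dict) -> bytes:
    """Frame a JSON-RPC 2.0 request as LSP base-protocol bytes."""
    body = json.dumps(
        {"jsonrpc": "2.0", "id": request_id, "method": method, "params": params}
    ).encode("utf-8")
    header = f"Content-Length: {len(body)}\r\n\r\n".encode("ascii")  # length in bytes
    return header + body


def symbol_and_token_requests(uri: str) -> list[bytes]:
    doc = {"textDocument": {"uri": uri}}
    return [
        lsp_request(1, "textDocument/documentSymbol", doc),       # symbol finding
        lsp_request(2, "textDocument/semanticTokens/full", doc),  # token extraction
    ]
```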

📖 Getting Started & Contributing

New to LSPRAG? Want to contribute? We've got you covered!

- Quick Start Guide - Get up and running in 5 minutes. Perfect for first-time contributors!

- Contributing Guide - Comprehensive guide explaining the codebase, architecture, and how to contribute

Recommended path for new contributors:

- Start with Quick Start Guide to run your first test

- Explore test files in `src/test/suite/` (ast, lsp, llm)

- Modify and experiment with existing tests

- Read Contributing Guide for deep dive into architecture

🎯 Project Status

| Language | Status | Framework | Features |
|---|---|---|---|
| Java | ✅ Production Ready | JUnit 4/5 | Full semantic analysis, mock generation |
| Python | ✅ Production Ready | pytest | Type hints, async support, fixtures |
| Go | ✅ Production Ready | Go testing | Package management, benchmarks |

⚙️ Configuration

Core Settings

| Setting | Type | Default | Description |
|---|---|---|---|
| LSPRAG.provider | string | "deepseek" | LLM provider (deepseek, openai, ollama) |
| LSPRAG.model | string | "deepseek-chat" | Model name for generation |
| LSPRAG.savePath | string | "lsprag-tests" | Output directory for generated tests |
| LSPRAG.promptType | string | "basic" | Prompt strategy for generation |
| LSPRAG.generationType | string | "original" | Generation approach |
| LSPRAG.maxRound | number | 3 | Maximum refinement rounds |
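This README does not spell out what a "refinement round" is. Because the logs record diagnostic-fix histories, one plausible reading is a generate → diagnose → fix loop capped by `maxRound`. The sketch below is a hypothetical model of that idea, not LSPRAG's implementation. `generate`, `diagnose`, and `fix` are injected callables standing in for the LLM and the language-server diagnostics. Each round diagnoses the current candidate and requests a fix only if problems remain. So there are at most `max_round` fix attempts, and the last fixed candidate is returned without a further check.

```python
from typing import Callable


def refine(
    generate: Callable[[], str],
    diagnose: Callable[[str], list[str]],
    fix: Callable[[str, list[str]], str],
    max_round: int = 3,
) -> tuple[str, list[list[str]]]:
    """Hypothetical generate/diagnose/fix loop; returns final code and per-round diagnostics."""
    test_code = generate()
    history: list[list[str]] = []
    for _ in range(max_round):
        problems = diagnose(test_code)
        history.append(problems)
        if not problems:
            break  # candidate is clean: stop before using all rounds
        test_code = fix(test_code, problems)
    return test_code, history
```

A higher cap gives the model more chances to repair compile or lint errors, at the cost of extra LLM calls per test.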

API Configuration

DeepSeek

{
  "LSPRAG.provider": "deepseek",
  "LSPRAG.model": "deepseek-chat",
  "LSPRAG.deepseekApiKey": "your-api-key"
}

OpenAI

{
  "LSPRAG.provider": "openai",
  "LSPRAG.model": "gpt-4o-mini",
  "LSPRAG.openaiApiKey": "your-api-key"
}

Ollama (Local)

{
  "LSPRAG.provider": "ollama",
  "LSPRAG.model": "llama3-70b",
  "LSPRAG.localLLMUrl": "http://localhost:11434"
}
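Before running `LSPRAG: Test LLM` against a local model, you can check that the server at `localLLMUrl` answers at all. The sketch below builds a non-streaming request to Ollama's `/api/generate` endpoint, whose JSON reply carries the text in a `response` field, and optionally sends it. It is only a connectivity check. LSPRAG's own client may use a different Ollama endpoint. The model name must match one you have pulled locally, and `ollama list` shows the exact names. The helper names are hypothetical.

```python
import json
import urllib.request


def ollama_generate_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a non-streaming request to Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_ollama(base_url: str, model: str, prompt: str, timeout: float = 60.0) -> str:
    """Send the request and return the generated text (needs a running Ollama server)."""
    req = ollama_generate_request(base_url, model, prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read())["response"]
```

For example, `ask_ollama("http://localhost:11434", "<your-model>", "Say hi")` should return a short string if the server and model are available.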